Robotics Myth 1: Repeatability and Accuracy

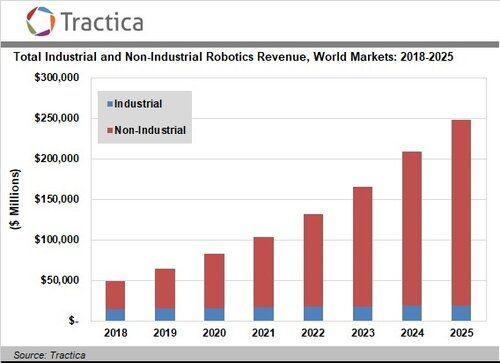

The global robotics market (valued at an estimated $39.72B in 2019) is expected to continue to blossom (hitting $248.5B by 2025). Various factors are driving this: the introduction of more sophisticated hardware at lower costs, the resurgence of “deep” learning and artificial intelligence technologies, and growing labor challenges that demand more automation for throughput, safety, mass customization, and logistics.

Up to this point in the growing robotics market, we’ve seen much speculation, bombastic claims, and unfulfilled promises. Over the past several years, these claims have led to a number of devastating robotics company closures, due either to unsatisfactory product-market fit or to romanticized, underperforming technology, leaving many founders and investors to learn what is myth and what is reality post mortem.

The purpose of this “Robotics Myths” blog series is to highlight some of these robotics myths, so that new founders and investors do not have to make the same mistakes and can be wiser for being informed by history. In addition, this blog aims to present the limitations of robotics today, shedding light for warehouse owners and individuals looking to automate, so they can make informed decisions when sourcing automation technology. Lastly, this blog series serves as a means to promote conversation, wherein we roboticists can freely discuss and clarify myths, in hopes of making the fundraising process, customer conversations, and customer expectations easier.

On Repeatability and Accuracy

Today, we are kick-starting this blog series by talking about repeatability. We’ve all had that one conversation with a sales representative from our favorite robot vendor claiming their robot is far superior to their competitors’ due to 1) the robot’s reach and extra degree of freedom (DOF), 2) the robot’s speed and payload, or 3) the robot’s impeccable repeatability and accuracy.

To the first claim, of course, having additional reach and DOFs directly impacts the robot’s kinematics and workspace. As a result, these factors influence the task’s feasibility (whether the robot can physically perform the task). To the second claim, the payload requirements depend on your task: the target robot should have sufficient payload to account for the tooling and the parts being picked. The justification for robot speed is simple: faster robot speed means faster cycle times, which in turn increase return on investment (ROI). However, the third claim, that Robot X is better than Robot Y simply due to its repeatability, is in general a bogus claim.

A good blog post discussing repeatability and accuracy by Robotiq.

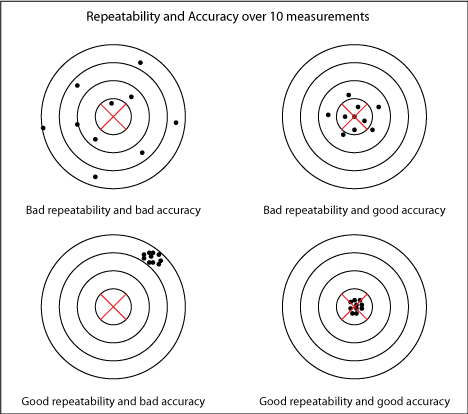

We define accuracy as the difference between the requested (desired) task and the obtained (result) task, whereas repeatability is a measure of the variance of all obtained tasks. To better understand repeatability and accuracy, we refer you to the Robotiq blog post noted above. For the rest of this post, we will use repeatability and accuracy fairly interchangeably, sometimes simply referring to them as the robot tolerance, since off-the-shelf robots tend to have repeatability and accuracy measures within the same order of magnitude and the argument presented in this blog applies to both.

What is YOUR Repeatability?

First, let’s perform a quick exercise to better visualize and understand these concepts. Grab a piece of paper and a marker and draw an “X” in the center of the paper. Now position your hand with the marker about a foot away from the “X”, close your eyes, and try to point at the “X”. Repeat this 10 or 25 times. You may open and close your eyes between each attempt. Notice how far off the dots (results) are compared to the “X” (target). This measure is your accuracy. The spread or the variance of the dots is your repeatability.
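If you measure where your dots landed, the two quantities can be computed in a few lines. The sketch below is only an illustration: the dot coordinates are made up, and the specific formulas (distance from the target to the centroid of the dots for accuracy, root-mean-square distance of the dots from their own centroid for repeatability) are one simple convention that I am assuming here, not the formal procedure behind a vendor data sheet.

```python
import math
from statistics import mean

def accuracy_and_repeatability(target, dots):
    """Return (accuracy, repeatability) for 2D points, in the units of the input."""
    # Centroid of all attempts
    cx = mean(x for x, _ in dots)
    cy = mean(y for _, y in dots)
    # Accuracy: how far the average attempt lands from the target
    accuracy = math.hypot(cx - target[0], cy - target[1])
    # Repeatability: RMS distance of the attempts from their own centroid
    repeatability = math.sqrt(mean((x - cx) ** 2 + (y - cy) ** 2 for x, y in dots))
    return accuracy, repeatability

target = (0.0, 0.0)                                        # the "X"
dots = [(3.0, 4.0), (3.0, 6.0), (5.0, 4.0), (5.0, 6.0)]    # made-up dots, in cm

accuracy, repeatability = accuracy_and_repeatability(target, dots)
print(f"accuracy: {accuracy:.2f} cm, repeatability: {repeatability:.2f} cm")
# prints: accuracy: 6.40 cm, repeatability: 1.41 cm
```

Note that the dots in this made-up example are tightly clustered (good repeatability) but centered well away from the “X” (poor accuracy), which is why the two numbers need to be read separately.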

If your results are similar to mine, you will notice that our kinematics as humans are not very good. The accuracy (difference) and repeatability (variance) of the dots against the true target (the “X”) and to each other are large. This exercise is comparable to how we test and control robots today. Robots are given a target within their workspace and rely on their precise kinematics to achieve that target. Even when vision systems and sophisticated (“deep”) perception algorithms are introduced, in most conditions the system first localizes the target (determines precisely where it is). Then, the system computes a goal (or a path) for the robot to achieve this target. In robotics, we call this the sense-plan-act paradigm (sometimes also called the OODA loop), wherein robots perform each of these steps in series and essentially “close their eyes” during their planning and acting phases.

Compared to humans, however, robots are multiple orders of magnitude more accurate and repeatable kinematically. Pull up the data sheet for your favorite robot and you’ll notice that its repeatability (a measure describing how far the dots are from each other and from the “X”) is quite often on the order of 0.1mm or better. I would argue that humans are, at best, cm-level precision kinematic systems. But if humans are so “erroneous”, how do we ever accomplish precise manual tasks? We will cover this question later in this blog. But first, let’s revisit repeatability for robots.

Repeatability is a Function of Your Task

Remember that the premise here is that the claim that Robot X is better than Robot Y simply due to its repeatability (and/or accuracy) is, in general, bogus. Of course, you may be thinking, there exists a subset of tasks that do indeed require high levels of accuracy and repeatability (e.g. precise assembly, insertions, welding, and QA). These tasks can generally be categorized as low-mix, extremely high-volume processes. For these tasks, the robot is set up, programmed, and calibrated for a single task and is expected to execute without failure for millions of cycles with little to no process change.

The tight coordination of multiple robots tasked to perform car assembly in a factory requires fine precision and high repeatability (Image Credit)

Packing Amazon delivery boxes is one of many tasks humans currently do that do not require extremely high repeatability (Image from CBC)

Now, the majority of tasks, like material handling, pick and pack, and fulfillment, do not require the micron-level (or even mils-level) precision that these high fidelity processes require. A good example is to think about your latest Amazon order: the contents within the box are not necessarily packed into the box with micron-level precision, nor do you care. This particular task does not require that your Amazon item be placed precisely 5.289cm away from the top right corner of the box. Instead, the packer places the objects loosely into the box at some relative positions such that the contents fit into the container (i.e. the box). Another example of this is when humans manually place objects into particular partitions, blister packs, or sections. This also does not require such fine levels of detail. In fact, the bulk of “automatable tasks” fall under this category: loose place parametrization with some variability and some acceptable margin of error.

The necessary repeatability is then a function of the task itself. How much repeatability is required is determined by the acceptable margin of error that constitutes task completion and success. For example, a task like assembly might actually require micron-level precision, with parts that have to be inserted into one another with sufficiently tight tolerances, whereas a task like packing an Amazon box can be done “fairly erroneously” provided that the objects are not damaged, they end up in the box, and they do not prevent the box from being closed properly.

How Much Repeatability Do We Need?

In this case, success need not be defined by having zero error or having perfect repeatability. Rather, success is defined as whether the agent (a human or robot) performed an action that resulted within the task specifications and tolerances. In other words, success describes whether the tolerance of the task is met.

In robotics, we call this the

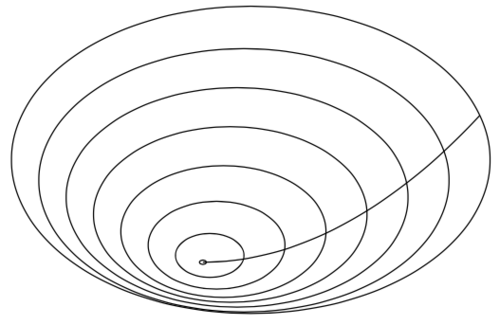

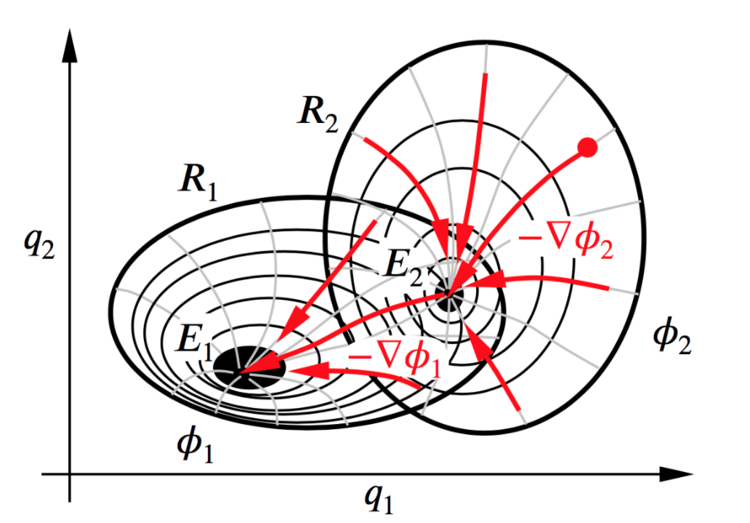

region of attraction or basin of convergence (or “funnels”). We can think of this as how much error can the task (and system) tolerate before success is no longer guaranteed. In the case of the manual packing of objects into their individual partitions (in the above image), the idealized placement is to place the object perfectly vertical into the compartment. However, the task itself can still be successful if the human places the objects 5 degrees from vertical. Similarly 10 or 25 degrees away from the idealized conditions should still result in the object falling into the correct compartment due to gravity and the object’s contact with the partition walls. We would describe this task as having a fairly large basin of convergence (of about, say, 25 degrees).

The basin of convergence of a task can be described by how far away from the idealized conditions we can go before success is no longer guaranteed. Visually, this can be illustrated by a funnel over the ideal conditions, in which states that are “sufficiently close” to ideal will still converge to success. Simply put, imagine a marble placed in the interior of the funnel: it will always converge towards the center.

Since the required repeatability is a function of the task itself, a robot must have sufficient repeatability to reliably fall into the basin of convergence of the task. So if the task has an acceptable margin of error of +/- 2.5cm and 25 degrees (like the example packing task above), then minimally, the robot should have a repeatability of +/- 1cm or so, such that if the robot targets the ideal conditions, any perturbations or errors away from ideal will still converge to success.
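As a rough sketch, the basin-of-convergence check for the packing example can be written as a simple test on the placement error. The `Tolerance` class and `within_basin` function are hypothetical names introduced for illustration, and treating the position and angle limits as independent bounds is a simplifying assumption; a real task's basin may couple the two.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tolerance:
    """Acceptable margin of error for a task (hypothetical container)."""
    position_cm: float
    angle_deg: float

def within_basin(position_error_cm, angle_error_deg, basin):
    """True if a placement error still falls inside the task's basin of convergence."""
    return (abs(position_error_cm) <= basin.position_cm
            and abs(angle_error_deg) <= basin.angle_deg)

# Margins taken from the example packing task: +/- 2.5cm and 25 degrees
packing_task = Tolerance(position_cm=2.5, angle_deg=25.0)

print(within_basin(1.0, 10.0, packing_task))   # True: 1cm and 10 degrees off still succeeds
print(within_basin(3.0, 5.0, packing_task))    # False: 3cm off misses the compartment
```

The point of the check is that success is a yes/no question about landing inside the margins, not a contest to minimize the error itself.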

Our Robots Are Already Precise Enough

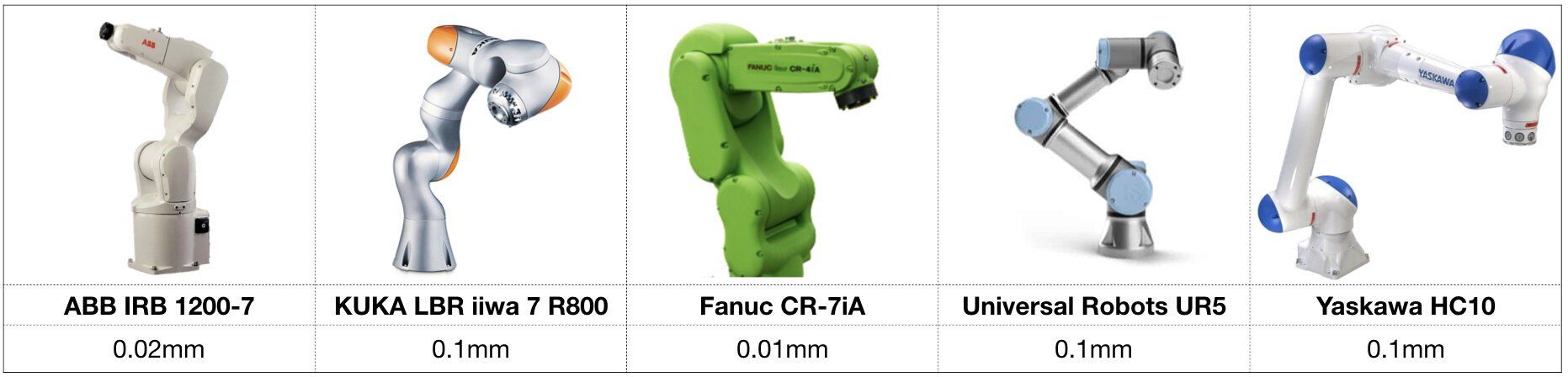

Given our task’s margin of error, let’s investigate some robot arms currently on the market and their reported repeatability (according to their public data sheets). Our image here is by no means a complete listing, but notice that every single robot has a repeatability of a tenth of a millimeter or better!

The repeatability measures of various robot arms currently on the market are ALL sufficient for automating manipulation tasks.

The reported repeatability measures are well within the basin of convergence of the task. Compared to the tolerance of the task (+/- 2.5cm), the repeatability of each of these systems (at worst 0.1mm) is negligible. To put things into perspective, the difference in magnitude is roughly that between a 15-minute drive (i.e. a distance of about 15 miles) and the radius of the earth (~4000 miles). The only reason the robot tolerances appear at all significant next to the task tolerance (to the right) is that the plot artificially inserts white space for visualization purposes.
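The arithmetic behind that comparison is quick to check. The snippet below uses only the numbers quoted in this post; the variable names are mine.

```python
task_tolerance_mm = 25.0        # +/- 2.5cm task margin
robot_repeatability_mm = 0.1    # worst-case repeatability quoted above

drive_miles = 15.0              # a 15-minute drive
earth_radius_miles = 4000.0     # approximate radius of the earth

task_to_robot = task_tolerance_mm / robot_repeatability_mm
earth_to_drive = earth_radius_miles / drive_miles

print(f"task tolerance vs. robot repeatability: {task_to_robot:.0f}x")   # 250x
print(f"earth radius vs. 15-mile drive: {earth_to_drive:.0f}x")          # 267x
```

Both ratios come out at roughly 250 to 1, so the analogy holds to within the rounding of the inputs.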

Robotics and automation is an integrated science. It relies on the tight integration of hardware, control, planning, and vision systems. The takeaway here is that perception (vision) and control errors will far outweigh the precision of the robot (assuming <0.1mm), thus making this robot differentiation metric pointless.

Of course, there are other specifications to account for when selecting a robot for your task (e.g. reachability, kinematics, payload, speed, safety, ease of use, etc.), but in terms of repeatability alone, any of these robots is sufficient. In fact, it does not matter which of these robots you select. They are all sufficiently precise for our example task, with repeatability orders of magnitude tighter than the requirements described by the basin of convergence. Unless your task requires a peg-in-hole insertion of parts that have tolerances of several mils, the repeatability of these robots makes almost no contribution. What is more important is how you control your robot to achieve task success. In other words, the main contributor is how you control (and drive) the robot towards the basin of convergence specified by the properties of the task. The magnitude of your control paradigm and vision errors will far outweigh the magnitude of the robot errors (e.g. repeatability and accuracy).
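One way to see why the robot's contribution washes out is a back-of-the-envelope error budget. The sketch below combines error sources by root-sum-square, which assumes the sources are independent; that model and the vision and control magnitudes are assumptions chosen purely for illustration, not measurements from any real system.

```python
import math

def combined_error(*sources_mm):
    """Root-sum-square of error sources, assuming they are independent."""
    return math.sqrt(sum(s ** 2 for s in sources_mm))

# Hypothetical error magnitudes, for illustration only
vision_mm = 5.0     # assumed perception/localization error
control_mm = 2.0    # assumed control/calibration error
robot_mm = 0.1      # robot repeatability quoted in this post

total = combined_error(vision_mm, control_mm, robot_mm)
without_robot = combined_error(vision_mm, control_mm)
robot_share = robot_mm ** 2 / total ** 2   # fraction of total variance due to the robot

print(f"total error: {total:.3f} mm")
print(f"total with a perfect robot: {without_robot:.3f} mm")
print(f"robot share of variance: {robot_share:.4%}")
```

Under these assumed numbers, swapping in a hypothetically perfect robot changes the total by less than a micron, while halving the vision error would change it by millimeters.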

Human Cm-Level Repeatability Still Prevails

In this blog, we argued that humans are at best cm-level precision systems. But if humans are so “erroneous”, then how do we ever accomplish any manual tasks that require tight tolerances, like assembly? And why do we still see humans doing so many precise manual tasks?

Revisiting our earlier “target the X” example, if you attempt the task with your eyes continuously open, you’ll notice that you hit the center of the “X” every time!

Humans may have worse kinematic repeatability, but we have a larger basin of convergence when we interact with the environment and the task via sensing and control. The reason is that when we perform tasks, we do not rely on kinematics alone, but instead leverage several sensory modalities to collectively provide feedback during execution of the task. We continuously leverage our vision during execution (see visual servoing), exploit contact and physics with the environment, and reason over both our audio and haptic channels. By collectively exploiting all our sensory channels, we in essence “grow” our basin of convergence. You can visually think of this as tiling a number of these regions of attraction (“funnels”) together to aggregate a much larger capture radius, where each funnel converges the state into the region of attraction of the next, continuously moving towards the goal or success.
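A toy model makes the effect of feedback concrete. Suppose, purely as an assumption for illustration, that every hand movement misses by a fixed fraction of the distance it tries to cover. With eyes closed you get one move; with eyes open you can look at the remaining error and move again, and each correction is smaller and therefore more precise. The function name and numbers below are hypothetical.

```python
def reach(distance_cm, error_fraction, corrections):
    """Remaining error after one move plus `corrections` feedback-driven re-aims.

    Toy model: each move misses by a fixed fraction of the distance it commands.
    """
    error = distance_cm * error_fraction      # the initial, open-loop move
    for _ in range(corrections):
        # Observe the remaining error and move again to cancel it;
        # the new miss is the same fraction of a much smaller move.
        error *= error_fraction
    return error

eyes_closed = reach(30.0, 0.2, corrections=0)   # about a foot away, no feedback
eyes_open = reach(30.0, 0.2, corrections=4)     # same hand, four visual corrections

print(f"eyes closed: {eyes_closed:.2f} cm")   # 6.00 cm
print(f"eyes open:   {eyes_open:.4f} cm")     # 0.0096 cm
```

The "hardware" is identical in both calls. Only the use of feedback changes, and in this model it is enough to take a cm-level system to well below a millimeter, which is the funnel-tiling idea in miniature.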

Robots are not quite there yet in terms of harmoniously leveraging their multiple channels of sensory feedback to achieve precise sensorimotor control. In many cases, robots even avoid the rich feedback (and contact dynamics) from the environment. A good example is that today, humans exploit contact whereas robots avoid contact.

Until robots can leverage multimodal sensory input (akin to humans), the repeatability of the robot should be considered for success. The measure of that requirement is proportional to the task at hand and the sophistication of control and feedback inherent in the system. Simply put, tighter-tolerance tasks require tighter-tolerance robots, while looser-tolerance tasks can make do with looser-tolerance robots. With regard to control, no feedback and no sensing require more repeatability and accuracy, while moderate amounts of sensing require less repeatability and accuracy. Measures of accuracy and repeatability are things to consider, but what is even more important is what you’re trying to automate, how you use the system, and the magnitude of these tolerances in relation to one another.

Conclusion: Spec to Your Needs

If you’re a supply chain expert looking to purchase new robot hardware and maximize your investment, we encourage you to first evaluate your task and its acceptable margin of error to determine what repeatability specifications your system requires. It’s important to ask yourself how much repeatability your task actually needs. Chances are you'll find your task does not require micron-level or even mm-level precision. Chances are you do not need to spend more on repeatability, and that your largest ROI justifiers are instead speed and ease of installation.

Of course, as our algorithms and control paradigms continue to improve (as we design more sophisticated methods to exploit feedback), the basin of convergence and the acceptable margins of error your task (and robot) may tolerate will grow larger. And as these innovations and technologies emerge, we’ll likely see less need and demand for extremely precise robot systems. Perhaps that is what the late Rethink Robotics had envisioned all along (unfortunately witnessing the difficulty of merging complex control and elegant actuation)… But in due time, when our control paradigms reign supreme and hardware becomes a commodity, we’ll be able to offset these major hardware inaccuracies through software alone, paving the way to more and more capable automation. But until then, coupling moderately good control algorithms and sufficiently accurate robots is more than enough to get the job done.

About the Author

Jay M. Wong is an entrepreneur, roboticist, and co-founder of Southie Autonomy. He leads the engineering effort on Southie’s no-code flexible automation system, which couples augmented reality and artificial intelligence to give humans a spatial, easy-to-use robot interface.

Wong worked as a robotics scientist at Draper and held visiting scientist roles at MIT and Harvard University, where he served as an autonomy expert on a large research project to mature autonomous mobile manipulation technology. He led Draper’s cloud robotics research initiative and development on the award-winning robotics system. Prior, he was at NASA and UMass Amherst, his alma mater, where he was named a fellow and two-time OAA awardee.